We’re less than three months away from election day, and candidates are stepping up their efforts to convince more Filipinos to vote for them. Various groups have released surveys on which candidates are leading the race, but some concerned voters are asking: are all these surveys really reliable? Which ones are credible, and which ones aren’t?

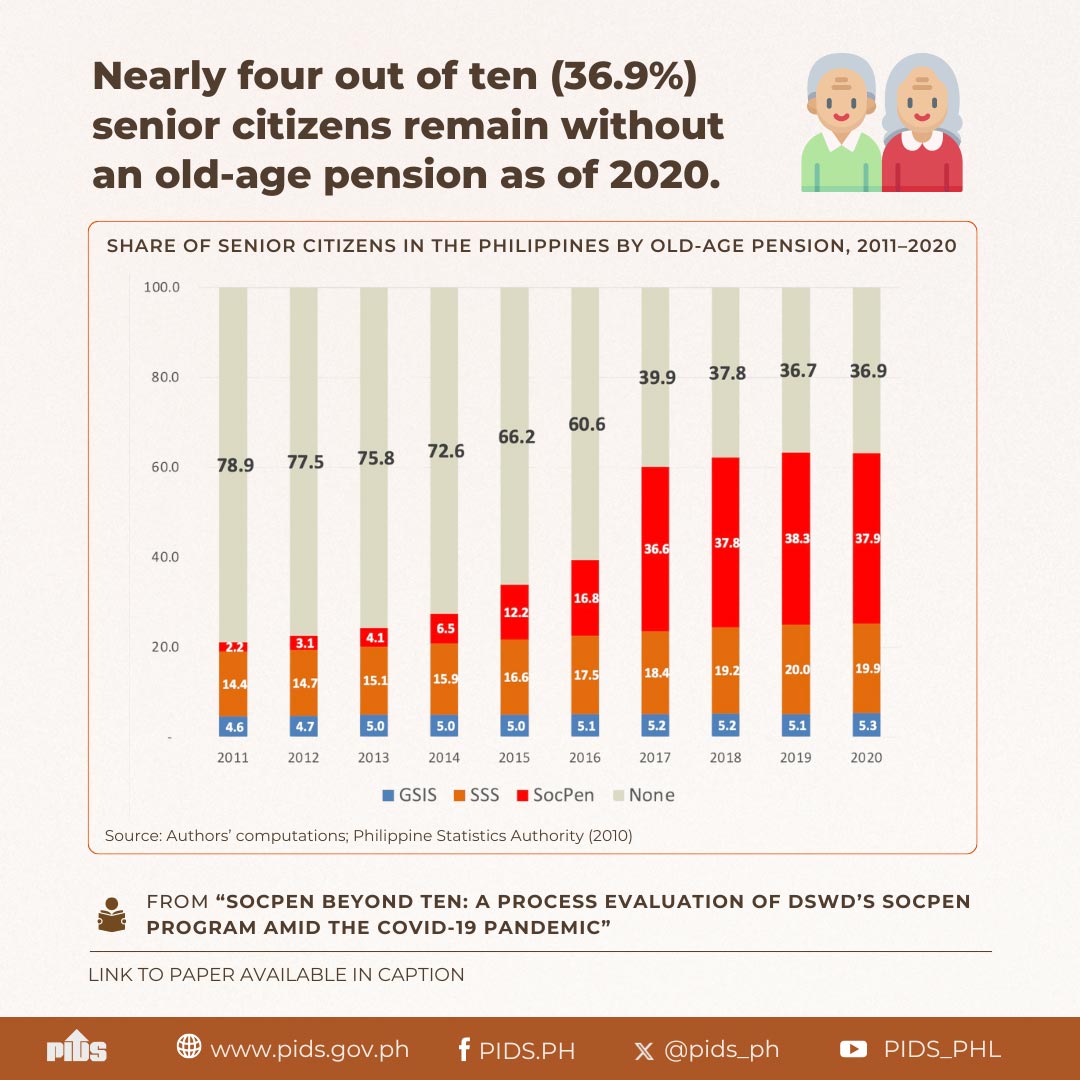


Should you trust survey results?

Last week, Facebook page SPLAT Communications, which describes itself as an “online resource for information on Sports Personalities Lifestyle Arts Travel”, raised a few eyebrows after some of their questionable “findings” were shared by Philippine Star in a since-deleted social media post. According to the post, it was a “statistical impossibility” that VP Leni Robredo would catch up with presidential aspirant Ferdinand “Bongbong” Marcos Jr.

But a closer look at SPLAT Communications made netizens question their legitimacy. SPLAT’s Facebook page was created only in November 2021 and has just 208 followers. Some videos on their YouTube channel are titled “Good Luck, UNITEAM! Leni, Wala Ka Talagang Alam” (“Leni, you really know nothing”) and “JUST IN! Pwede na Mag-iyakan” (“You can start crying now”). The survey methodologies of this so-called “data analytics firm” are also unclear.

When netizens started calling them out on Facebook, the page did not share their methodology. Instead, they edited one of their captions to read: “Work, harder, campaign harder and stop ‘crying’. The more time you spend hating, ridiculing, bullying and ‘crying’ — the less time you spend converting actual supporters/voters to support your candidate, this is critical specially when your candidate is a laggard.”

They also closed the comments section of the post.

SPLAT isn’t alone

SPLAT Communications isn’t the only social media page publishing questionable surveys. Private individuals, fan pages, and even universities, established media organizations, and politicians have been running informal election polls on Facebook and Twitter. These polls often draw thousands of reactions, but they aren’t accurate: the people who answer choose themselves, so the results reflect each page’s audience rather than the voting population.

Now, we’re not saying that conducting these surveys is wrong per se. But these social media pages should be clear about their methodologies and include a disclaimer if their data isn’t statistically sound. It’s just the responsible thing to do.
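
To see why a pile of reactions is not the same as a representative sample, here is a small Python simulation. Every number in it is made up for illustration: it assumes a hypothetical electorate in which 40% back "Candidate A", and assumes A's supporters are three times as likely as everyone else to answer an online poll. `simulate_polls` is a hypothetical helper written for this example.

```python
import random


def simulate_polls(true_share=0.40, population=200_000, random_n=1_200,
                   base_response_rate=0.05, supporter_boost=3.0, seed=2022):
    """Compare a random sample with a self-selected online poll.

    Every parameter here is hypothetical, chosen only to illustrate the effect.
    """
    rng = random.Random(seed)
    # Hypothetical electorate: True means the voter backs Candidate A.
    voters = [rng.random() < true_share for _ in range(population)]

    # Random sample: every voter has the same chance of being picked.
    sample = rng.sample(voters, random_n)
    random_estimate = sum(sample) / random_n

    # Self-selected poll: Candidate A's supporters are more eager to respond.
    responses = [
        v for v in voters
        if rng.random() < base_response_rate * (supporter_boost if v else 1.0)
    ]
    online_estimate = sum(responses) / len(responses)

    return random_estimate, online_estimate, len(responses)


random_est, online_est, n_online = simulate_polls()
print(f"Random sample of 1,200: {random_est:.1%} for Candidate A")
print(f"Self-selected poll of {n_online:,}: {online_est:.1%} for Candidate A")
```

With these made-up settings, the random sample of 1,200 should land near the true 40%, while the self-selected poll should come out near two-thirds, even though it collects roughly 15 times as many responses. Piling on more reactions does not fix the bias; it only makes the wrong answer look more certain.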

UP School of Statistics speaks up on unreliable surveys

Faculty members of the University of the Philippines School of Statistics have come together to call out the worrying rise of “surveys with unclear methodologies” on social media. They specifically named “Kalye Surveys,” which, incidentally, is what SPLAT calls their survey videos.

“Surveys reveal facts, beliefs, sentiments, and opinions based on a representation of the population,” the statement read. “However, the quality of any inference cannot rise above the quality of the methodology it is based upon.”

In other words, a survey’s findings are only as good as its methodology. If the methodology is questionable, the results are probably inaccurate.

To voters: Be critical of surveys and their so-called “findings”

The statement advises voters to be critical of surveys and not to accept published results at face value. Voters should ask questions to make sure a survey was done properly and without bias, especially in how its respondents were selected. Questions worth asking include, but are not limited to:

- How was the sample selected?

- What events surrounded the period of data gathering? (e.g. Was the filing of certificates of candidacy (COCs) over? Was the debate over? Had the campaign period already begun?)

- When facing an interviewer, was the respondent interviewed in a neutral yet professional tone? (i.e. Was the interviewer fishing for a particular answer?)

- What are the control mechanisms implemented to ensure the accuracy of the protocols in data collection?

The 2016 US elections are a good illustration of how forecasts can go wrong. In the months leading up to the vote, forecasters gave Hillary Clinton a 70% to 99% chance of beating Donald Trump. According to the US-based Pew Research Center, the miss could have been due to respondents not being truthful or not showing up to cast their votes.

According to statistician Dr. Jose Ramon Albert, a senior research fellow of the Philippine Institute for Development Studies (PIDS), survey results in the Philippines could be affected by different factors, such as:

- the questionnaire being too complex

- the respondent not being truthful

- the interviewer influencing the responses of a respondent

- “the information system of a respondent” (e.g. the respondent may be unable to confirm their age for various reasons)

- “the mode of data collection”

- “the survey setting” (e.g. is it done face-to-face or online?)

SPLAT Communications has compared its large number of respondents with those of other polling bodies. But is a bigger sample size really better?

According to Albert, not really. Opinion polls typically use a sample size of 1,200, and this has a scientific basis: a random sample of 1,200 is enough to produce accurate results with a margin of error of about 3 percentage points.
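
For readers curious where the 3-point figure comes from, here is the usual textbook calculation. It assumes a simple random sample, the conventional 95% confidence level (z ≈ 1.96), and the most conservative case, where support is split evenly (p = 0.5). Real polls with multi-stage designs adjust this further, so treat it as an approximation:

$$
\text{MOE} = z\sqrt{\frac{p(1-p)}{n}} = 1.96\sqrt{\frac{0.5 \times 0.5}{1200}} \approx 1.96 \times 0.0144 \approx 0.028
$$

That is, roughly plus or minus 3 percentage points.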

Albert added that while a larger sample would have a smaller margin of error, a cost-benefit analysis shows that for opinion polls, 1,200 respondents are enough to reflect the population’s opinion. He also said that the numbers, and opinions themselves, would continue to fluctuate as the election nears.
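
This cost-benefit trade-off comes from how the margin of error behaves: for a simple random sample it shrinks with the square root of the sample size, so quadrupling the number of interviews (and, roughly, the fieldwork bill) only halves it. The minimal Python sketch below assumes simple random sampling at the 95% confidence level; `margin_of_error` is a hypothetical helper written for this illustration, not any pollster's actual tool.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a proportion from a simple random sample of size n.

    p=0.5 is the most conservative case; z=1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


# Each doubling of the sample doubles the interviews,
# but shrinks the margin only by a factor of about 1.41.
for n in (600, 1_200, 2_400, 4_800, 9_600):
    print(f"n = {n:>5,}: +/- {margin_of_error(n) * 100:.1f} points")
```

Going from 1,200 to 4,800 respondents quadruples the interviews but narrows the margin only from about 2.8 to about 1.4 points.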

So, what does a reliable survey look like, exactly?

If you want to see how a credible institution conducts an electoral survey, take a look at how Pulse Asia does it.

Random sampling

Pulse Asia’s respondents are chosen randomly. Why? “The general rule before you claim that a survey is representative of the entire population is that the selection of the samples has to be random,” Pulse Asia president Ronald Holmes told ANCX.

Holmes then laid out the steps Pulse Asia takes in conducting their surveys. First, they determine the sample size, which back in 2020 was the standard 1,200 (for the reasons explained above). Then they select the survey areas at random. NCR cities and municipalities are always included, but the randomization happens at the barangay level. They also include participants from the rest of Luzon, the Visayas, and Mindanao.
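
Here is a minimal Python sketch of the area-selection step as Holmes describes it for NCR: every city stays in, and the randomness happens at the barangay level. The frame is hypothetical (real city names, but placeholder barangay labels and an arbitrary two barangays per city), and the sketch does not attempt to model how areas outside NCR are chosen. `pick_barangays` is a hypothetical helper.

```python
import random


def pick_barangays(barangays_by_city, per_city, seed=None):
    """Keep every city, but choose `per_city` barangays at random inside each one."""
    rng = random.Random(seed)
    return {
        city: rng.sample(barangays, per_city)  # sampling without replacement
        for city, barangays in barangays_by_city.items()
    }


# Hypothetical NCR frame: city names are real, barangay labels are placeholders.
ncr = {
    "Manila": [f"Manila Brgy {i}" for i in range(1, 51)],
    "Quezon City": [f"QC Brgy {i}" for i in range(1, 51)],
    "Pasig": [f"Pasig Brgy {i}" for i in range(1, 31)],
}

chosen = pick_barangays(ncr, per_city=2, seed=7)
for city, brgys in chosen.items():
    print(city, brgys)
```

Because every city is guaranteed a place and only the barangays are drawn by chance, no one inside a city gets to decide whether their neighborhood is included.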

After randomly selecting barangays, they randomly choose a starting point (e.g. a public market, school, or church) before choosing the households, using intervals of five households in urban areas and two in rural areas. Further randomization is done when interviewers approach each household.
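
The household step resembles what statisticians call systematic sampling. The Python sketch below reads "intervals of five" as taking every fifth household in urban areas (every second in rural ones), which is an assumption, and adds a random offset at the start, as textbook systematic sampling does. The route of 40 households and the `pick_households` helper are hypothetical.

```python
import random

# Assumed reading of the intervals Holmes cited: every 5th (urban) or 2nd (rural) household.
INTERVALS = {"urban": 5, "rural": 2}


def pick_households(households, setting, how_many, seed=None):
    """Systematic sample: random offset, then every k-th household along the route.

    `households` is an ordered list, e.g. homes in the order an interviewer
    walks them from the randomly chosen starting point.
    """
    rng = random.Random(seed)
    k = INTERVALS[setting]
    start = rng.randrange(k)         # random offset in 0..k-1
    selected = households[start::k]  # every k-th household from the offset
    return selected[:how_many]


route = list(range(1, 41))  # hypothetical: 40 households numbered along one route
print(pick_households(route, "urban", how_many=5, seed=1))
print(pick_households(route, "rural", how_many=5, seed=1))
```

The fixed interval keeps interviewers from hand-picking friendly-looking homes, while the random start keeps the first household from being the same one every time.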

Unbiased questions

Pulse Asia also ensures that their questionnaires are designed without bias. This means “making sure that the questions are not leading, do not condition the responses, are exhaustive (meaning to say, you’ve provided all the choices that one could make), and measures the opinion intended to be measured.”

Transparency

Only when they are sure the questionnaire meets these standards do their trained interviewers go into the field to conduct the survey. For each survey, Pulse Asia also releases the data they’ve gathered, along with a detailed explanation of their methodology and other factors they considered during the survey’s implementation. You can find the data on their website.

Go beyond surveys

Surveys give voters an idea of how the candidates are performing in the race and how wide the gaps between them are. But as a voter, you shouldn’t let surveys alone decide your vote. Study them, but go beyond them, too. Do your own research: look into each candidate’s platforms, projects, and promises before you make your final decision in May.